Summary: Artificial intelligence is increasingly being positioned not just as a technical tool, but also as a conversational companion, capable of providing dialogue, guidance, and even simulated empathy. In the field of healthcare and medical imaging, AI systems are already enhancing diagnostic accuracy, improving workflow, and helping inform patients about complex procedures. Yet the question remains whether AI thought companions—digital entities designed for conversation—can ever replace the authenticity of human connections. This article examines the potential and limitations of AI companions, with a particular focus on their application in medical imaging departments, where trust, empathy, and effective communication are crucial.

Keywords: AI thought companions, human relationships and AI, artificial empathy, digital companionship, AI and medical imaging, future of human connection.

The Emergence of AI Companions in Medical Imaging

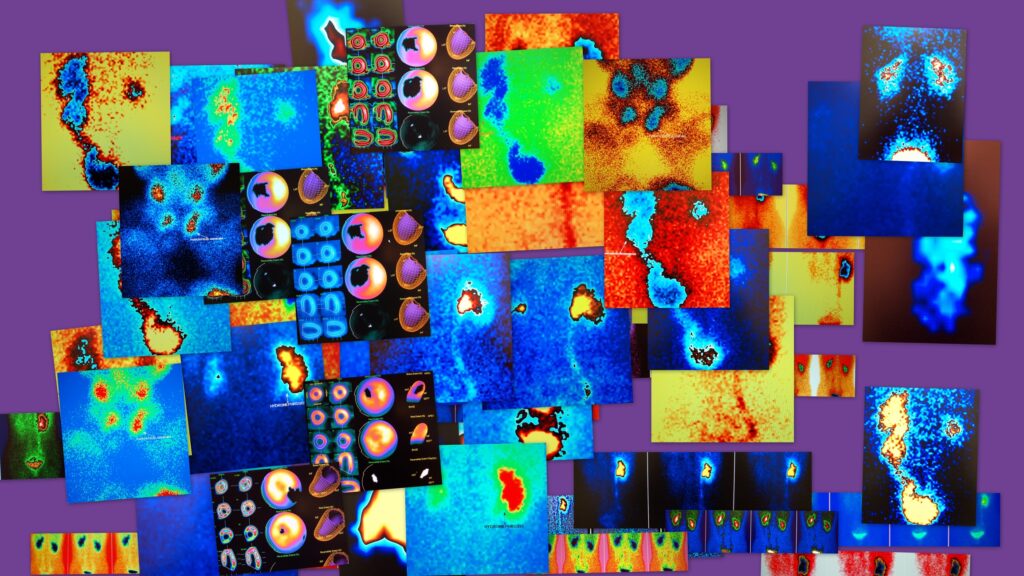

The rise of AI companions parallels the adoption of AI in medical imaging. While algorithms already excel at detecting tumours on CT scans, quantifying cardiac function on MRI, and identifying subtle abnormalities on PET images, conversational AI is being trialled as a companion technology.

For instance, several hospitals in Europe are piloting AI-driven “virtual assistants” that interact with patients before imaging appointments. These systems explain how to prepare for procedures, answer questions about radiation exposure, and provide reminders about fasting or medication schedules. In oncology centres, some AI companions are being tested to help patients understand the role of imaging in treatment monitoring, offering simple, clear explanations tailored to different levels of health literacy.

These developments suggest that AI companions may become more integrated into imaging services, where anxiety and uncertainty often dominate the patient experience.

Human Connection and the Patient Journey

Despite technological progress, the importance of human connection in medical imaging cannot be overstated. Patients undergoing scans often describe feelings of fear, claustrophobia, or isolation. While AI systems can provide reassurance in text or voice form, radiographers play a unique role in calming patients through eye contact, tone, and presence.

Consider the MRI suite: the patient enters a noisy, enclosed machine and must remain still for extended periods. An AI thought companion could prepare the patient by simulating the sounds of the scanner or offering real-time encouragement through headphones. Yet the empathetic words of a radiographer—“you’re doing really well, just one more minute”—carry a weight that stems from lived humanity.

These interactions go beyond words; they involve trust in another person’s skill and care. A machine may provide the same message, but the absence of reciprocity and authenticity makes it a different experience altogether.

Artificial Empathy in Healthcare Contexts

Artificial empathy is at the centre of AI companionship. In medical imaging, AI companions are being developed to detect anxiety in patient speech and to adjust responses accordingly. For example, a patient expressing nervousness about radiation could be reassured by an AI companion explaining dose safety in lay terms.

In paediatric imaging, pilot projects have used animated AI avatars to talk children through procedures. These companions can explain, in child-friendly language, why the child needs to stay still for an MRI or what the scanner will sound like. Early studies suggest such tools can reduce sedation rates, highlighting the potential of AI in creating calmer experiences.

However, authenticity remains the missing piece. The AI does not share the child’s nervousness or the parent’s concern. It mirrors patterns it has been trained on. While this may reduce stress, it cannot replace the warmth of a radiographer kneeling beside the child, offering encouragement drawn from empathy rather than programming.

Mental Health Support in Imaging Departments

AI thought companions are also being explored for mental health support in medical imaging pathways. Anxiety about diagnostic results is common, and AI companions could provide mindfulness exercises, guided breathing, or cognitive behavioural therapy techniques while patients wait for scans.

For example, in some pilot schemes, patients waiting for PET scans are offered access to a digital companion on a tablet. The companion explains what will happen during the procedure, provides stress-reduction exercises, and answers common questions. Patients report feeling calmer and better informed, which in turn may improve cooperation during the scan.

Yet limitations are evident. A patient receiving a life-changing diagnosis, such as the discovery of metastatic cancer, cannot rely on AI companionship for genuine support. In these moments, empathy and compassion from clinicians are irreplaceable. While AI can provide structure and distraction, it cannot share in the human weight of such experiences.

Authenticity, Trust, and Clinical Care

Trust is fundamental in healthcare. Patients place their confidence in radiographers, radiologists, and oncologists because they perceive authentic care and professional responsibility. This trust is fragile, and replacing human interactions with AI companions risks undermining it.

In diagnostic imaging, where results can carry profound consequences, patients need to feel that they are more than just data points. A conversation with a radiologist who carefully explains the findings of a CT scan carries authority and humanity. If an AI companion delivers the same information, even flawlessly, many patients may feel uneasy, aware that they are engaging with an entity incapable of genuine understanding.

Authenticity matters not just emotionally but ethically. Patients deserve to know whether they are interacting with a person or a machine, and to be reassured that their vulnerability is met with genuine empathy.

Technology, Isolation, and the Clinical Setting

Imaging departments often involve long waits and minimal interaction. Patients may feel alone while preparing for procedures or during recovery. AI companions could fill some of this void, providing distraction and reassurance. For example, an AI companion might talk patients through a relaxation exercise while they wait for their MRI slot, reducing perceived waiting times and easing stress.

However, over-reliance on AI risks creating clinical environments where human contact is reduced to a technical necessity, with empathy delegated to machines. This could leave patients feeling that their care is efficient but impersonal. In a field as sensitive as medical imaging, where the stakes often involve cancer detection or neurological disease, the loss of human touch would be a significant step backwards.

Ethical Considerations in Imaging Practice

Introducing AI thought companions into medical imaging raises pressing ethical questions. One is dependency. Patients who find comfort in digital companions during imaging journeys may prefer these interactions to those with staff, which could diminish human relationships in care.

Privacy is another concern. Conversations with AI companions often involve sensitive personal and medical information. If these dialogues are stored or analysed, there are risks to confidentiality. For example, a patient confiding fears about their cancer treatment to an AI system may not realise that their data could be used for commercial or research purposes.

There is also a broader cultural issue: should healthcare invest in technologies that simulate human connection, or should resources be directed towards strengthening human-centred care? Medical imaging services already face pressure to balance efficiency with compassion, and the introduction of AI companions could tip this balance in unintended ways.

Case Studies: AI in Radiology Departments

Recent initiatives illustrate both the promise and the limits of AI companionship in imaging:

- Paediatric MRI guidance: Children at certain European centres interact with AI avatars before entering scanners. These avatars explain the process in playful terms, which has reduced the need for sedation. Yet radiographers still provide personal reassurance, highlighting that AI works best as a supplement.

- Virtual oncology assistants: Some cancer centres use AI chat companions to support patients awaiting imaging results. They explain timelines and answer common queries about PET or CT scans. While patients appreciate the availability of these systems, they consistently report that final conversations with doctors remain irreplaceable.

- Elderly imaging patients: Pilot projects in geriatric imaging units have trialled AI companions to reduce confusion during complex preparation steps, such as fasting before abdominal scans. The systems improved compliance, but could not address the loneliness many elderly patients expressed, which required human contact.

These case studies underline a pattern: AI companions can enhance efficiency and reduce stress, but they cannot replace the unique qualities of human care.

Towards Integration, Not Replacement

The future of AI companions in medical imaging is likely to be integrative. They can prepare patients, reduce anxiety, and free clinicians to focus on technical expertise and empathetic conversations. Used wisely, they could strengthen the overall patient experience.

However, the notion that AI thought companions might replace human connection risks undermining the essence of healthcare. The authenticity of human interaction, particularly when delivering diagnoses or supporting patients through complex procedures, is irreplaceable. AI should be seen as an assistant, not a substitute.

Conclusion

AI thought companions are opening new possibilities in healthcare and medical imaging, from easing anxiety before scans to offering explanations of complex procedures. They can simulate empathy, provide reassurance, and improve efficiency. Yet the essence of human connection—authenticity, reciprocity, and shared vulnerability—remains beyond their reach.

The challenge for medical imaging is to use AI companions as complements to human care, ensuring that efficiency does not come at the cost of compassion. Patients need both technological excellence and genuine human presence. To allow artificial companionship to replace authentic human connection would risk turning care into simulation, leaving patients informed yet emotionally unsupported.

Disclaimer

This article is intended for informational and educational purposes only. It does not provide medical advice, diagnosis, or treatment and should not be relied upon as a substitute for professional medical advice or treatment. Readers should always consult with qualified healthcare professionals for advice on medical conditions, imaging procedures, or mental health concerns. While AI technologies are discussed in this article, their applications in medical imaging and healthcare are still evolving and should be considered with caution. The views expressed are general in nature and do not represent the official position of any medical institution or regulatory body.
