Diagnostic medical imaging through the application of artificial intelligence

Alan Turing, who features on the back of the new £50 banknote, not only helped to crack the Enigma code during World War II but was also a pioneer of machine learning. In 1950, he came up with the Turing test, published in a paper called ‘Computing Machinery and Intelligence’. Turing proposed the test as a method of inquiry for determining whether a computer system is capable of thinking like a human being. The phrase Artificial Intelligence (AI) was first coined by the computer scientist John McCarthy in 1955; he also predicted that creating an AI machine would require ‘1.8 Einsteins and one-tenth the resources of the Manhattan Project.’

According to IBM, the four fundamental pillars of a trusted AI system are:

Fairness: The input training data and models used in AI systems should not be biased towards certain groups.

Robustness: The data and models in the AI system must be secured and not compromised.

Explainability: The output data from the AI system should be in a logical format that the end-user can understand.

Lineage: AI systems should be audited and all details recorded.

For several decades, researchers have been working to develop methods that capture the reasoning used by physicians to arrive at diagnoses. The application of Artificial Intelligence (AI) in the clinical setting started to expand in the 1980s and 1990s through Bayesian networks, artificial neural networks and hybrid intelligent systems. For example, in 1986, DXplain was developed by the Massachusetts General Hospital in conjunction with the Harvard Medical School Laboratory of Computer Science as a diagnostic decision-support system. The DXplain database included 5,000 clinical indexes associated with more than 2,000 diseases, and each disease description had at least ten current references. DXplain also served as an early electronic textbook and medical reference system.

Since 1993, the Barnes-Jewish Hospital in St. Louis has been using the GermWatcher electronic microbiology surveillance application to detect and investigate hospital-acquired infections. In 2013, UK Babylon NHS services encompassed more than 40,000 registered user-patients, who received remote consultations with physicians via text and video messaging through its mobile application.

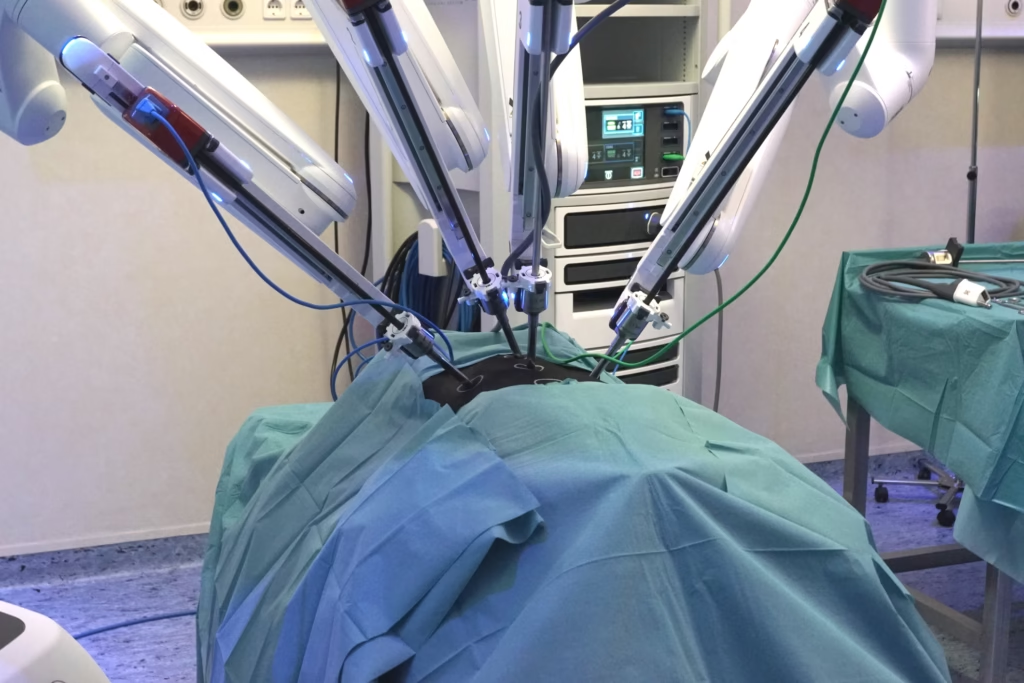

The Da Vinci robotic surgical system, developed by Intuitive Surgical Inc., was given FDA approval in 2000. The robot aims to facilitate surgery using a minimally invasive approach and is controlled by a surgeon from a console. It is used especially in urological and gynaecological surgery. Since 2018, Boston Children’s Hospital has been working on a web interface-based AI system whose chatbots advise parents on the symptoms of ill children and on whether a visit to the doctor is required.

The National Institutes of Health (NIH) supported the development of the AiCure app, which monitors the patient’s use of medication via the smartphone camera and is intended to reduce the rate of non-adherence.

In 2016, the Digital Mammography DREAM Challenge was carried out on several networks of computers. The aim was to establish an AI-based algorithm by reviewing 640,000 digital mammograms. The challenge demonstrated that AI has potential, but that it is unlikely to replace doctors outright.

In 2018, the Food and Drug Administration approved the first algorithm to make a medical decision without the need for a physician to review an image. The algorithm was developed by IDx Technologies and analyses retinal images to detect diabetic retinopathy with 87% accuracy.

Using these systems won’t make a bad doctor a good doctor, but might make a good doctor a better doctor.

The healthcare revolution has begun in the area of diagnostic medical imaging through the application of Artificial Intelligence (AI). This revolution will include new technologies for image acquisition and processing. Ultimately, AI will lead to improvements in treatment plans, data storage and data mining (turning raw data into useful information), and will reshape the future role of the radiologist. Medical AI applies computer technology to detect associations in a dataset of clinical cases in order to form a diagnosis and provide a suitable treatment plan for the patient, with the aim of a more favourable prognosis.

Artificial intelligence (AI) and machine learning, the study of algorithms that learn from data, will analyse complex medical imaging data from patients. These high-resolution images are mostly obtained from X-rays, computed tomography (CT) scans and magnetic resonance imaging (MRI), and they take time to evaluate. Consequently, AI can be a valuable asset for radiologists, helping to increase output and improve accuracy.

Clinical application of artificial intelligence in radiology

Thoracic computed tomography (CT) is considered the gold standard for nodule detection in lung pathology. Artificial Intelligence (AI) can be applied to the screening process to automatically identify these nodules and categorise them as benign or malignant. However, in comparison to standard chest radiography, CT is significantly more costly and even low-dose CT delivers a higher radiation dose.

Multiphase contrast-enhanced magnetic resonance imaging (MRI) is the current modality of choice for the characterisation of liver masses incidentally detected on imaging. Contrast-enhanced computed tomography (CT) is the basis for the screening of liver metastases. The description of a liver mass by CT and MRI primarily relies on the dynamic contrast-enhancement characteristics of the mass in multiple phases. MRI and CT can depict both benign and malignant liver masses, and this, coupled with relevant clinical information, allows reliable characterisation of most liver lesions. Some cases, however, have nonspecific or overlapping features that may present a diagnostic dilemma. Artificial Intelligence (AI) may aid in characterising these lesions as benign or malignant and therefore help to prioritise follow-up evaluation for patients with these lesions.

Screening mammography is technically challenging to interpret. Artificial Intelligence (AI) can assist in the interpretation, in part by identifying and characterising micro-calcifications, which are small deposits of calcium in the breast.

Artificial intelligence and brain imaging

A brain tumour is an abnormal mass of tissue resulting from the uncontrolled growth of cells. There are more than 150 known intracranial tumours that are broadly classified as benign (e.g. chordomas, gangliocytomas) or malignant (e.g. medulloblastomas, glioblastoma multiforme), and as primary (e.g. craniopharyngiomas, pituitary tumours) or metastatic. Metastatic tumours in the brain affect 25% of patients with cancer, which corresponds to about 150,000 people a year; among people with lung cancer, 40% will develop metastatic brain tumours.

Artificial Intelligence (AI) can assist neurosurgeons by evaluating the type of brain tumour during surgery. In a clinical trial, the researchers combined stimulated Raman histology (SRH) with deep convolutional neural networks (CNNs), a class of models loosely inspired by the way the brain processes visual information, to predict the patient’s diagnosis in the clinical setting. The CNN was trained on over 2.5 million SRH images, classified as malignant glioma, lymphoma, metastatic tumour or meningioma. The CNN system was validated using half of the 278 brain tissue samples and a pathologist analysed the other half. The CNN determined the type of tumour in a few minutes, whereas the pathologist would take much longer to reach a diagnosis. It is worth noting that the CNN system gave 95% accurate results compared to 94% by the pathologist. The CNN made a correct diagnosis in all 17 cases that the pathologist got wrong, while the pathologist got the right answer in all 14 cases in which the machine made a wrong diagnosis. Consequently, there is a need for machine medicine to work in conjunction with pathology.
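To give a feel for what the ‘convolutional’ part of a CNN does, the following is a minimal sketch in plain Python, not the network used in the trial. It slides a small filter over a toy image and records how strongly each patch matches the filter. The image, the filter values and the function name `convolve2d` are all illustrative assumptions; a real CNN learns its filter values from training images and stacks many such layers.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the element-wise products.

    Strictly this is cross-correlation, which is what deep learning
    libraries usually compute under the name "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Toy 4x4 "image" (assumed values): dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)  # the middle column is largest, where the edge sits
```

The resulting feature map is largest where the image contains the pattern the filter encodes, which is how early CNN layers pick out edges and textures before later layers combine them.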

Stimulated Raman histology (SRH) is based on the Raman effect. The Raman effect occurs when photons from a scanning laser are scattered by the molecules in a sample, generating a scattering pattern which can be detected by a 2-D microarray. Therefore, the application of SRH to biological tissue produces a real-time histopathological image. The advantage of this technique over traditional pathology is that the biological sample does not have to undergo freezing to acquire the pathology results. SRH allows for the fast, high-resolution acquisition of structural information through the generation of spectral images.

SRH can differentiate between lipids, proteins and nucleic acids by the way each vibrates when exposed to the laser. The generated image is viewed at high resolution without the modification of the sample (e.g. freezing) that a traditional pathology examination requires.

Deep learning involves recognising complex relationships in large data sets. The result is a network built from successive layers, each transforming the output of the previous one, which arrives at a prediction such as the diagnosis of a brain tumour.
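The idea of a layered network can be sketched in a few lines of plain Python. The example below is an illustration only: the three input features, all weights and biases, and the function names are made-up assumptions, whereas a trained network would learn millions of such weights from data. It passes the inputs through one hidden layer and one output layer to produce a score between 0 and 1.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive values and set negative ones to zero."""
    return [max(0.0, v) for v in values]


def sigmoid(value):
    """Squash any real number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-value))


# Hypothetical image-derived features for one sample.
features = [0.8, 0.2, 0.5]

# Hypothetical weights: 3 inputs -> 2 hidden units -> 1 output.
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]
output_biases = [-0.1]

hidden = relu(dense(features, hidden_weights, hidden_biases))
score = sigmoid(dense(hidden, output_weights, output_biases)[0])
print(f"hidden layer: {hidden}")
print(f"output score: {score:.3f}")
```

Training consists of adjusting the weights so that the output score agrees with known diagnoses across a large set of labelled examples.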

The studies have demonstrated the powerful combination of SRH and AI in the real-time predictions of a patient’s brain tumour diagnosis. This approach allows rapid surgical decision-making, especially when expert neuropathologists are hard to find.

Artificial Intelligence (AI) facilitates diagnostic predictions; for example, BrainScan provides an automatic search for similar computed tomography (CT) and magnetic resonance imaging (MRI) scans in large data sets. The approach works by searching medical cases and extracting the relevant information from a database of CT and MRI scans to help with the diagnosis. The matching scans are retrieved in a matter of minutes so that physicians can make a more accurate diagnosis.
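A similar-scan search of this kind can be sketched as follows. This is not BrainScan’s actual method, which the article does not describe; it assumes that every scan has already been reduced to a short list of numbers (a feature vector) and ranks archived cases by cosine similarity to the query. The case identifiers, vectors and function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query, archive, top_k=2):
    """Return the identifiers of the `top_k` archived cases most similar to `query`."""
    ranked = sorted(
        archive.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [case_id for case_id, _ in ranked[:top_k]]


# Hypothetical archive: case identifier -> feature vector for the scan.
archive = {
    "case_A": [0.9, 0.1, 0.3],
    "case_B": [0.1, 0.8, 0.5],
    "case_C": [0.8, 0.2, 0.4],
}

query_scan = [0.85, 0.15, 0.35]
matches = most_similar(query_scan, archive)
print(matches)
```

In practice the feature vectors would come from an image model and the archive would hold many thousands of cases, so an indexed nearest-neighbour search would replace the simple sort used here.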

Radiation Oncology – Radiation treatment planning can be automated by segmenting tumours for radiation dose optimisation. Furthermore, assessing responses to treatment by monitoring over time is essential for evaluating the success of radiation therapy efforts. Artificial Intelligence (AI) can perform these assessments, thereby improving accuracy and speed.

Cardiovascular disease

Cardiovascular disease (CVD) is a leading cause of mortality worldwide and accounted for 17.6 million deaths in 2016. Therefore, it is crucial to measure the various structures of the heart to determine if a person is at risk of developing cardiovascular disease. Also, it would be useful to have a clearer understanding of the early symptoms of CVD in order to propose a proper treatment plan for the patient.

Research into automated chest X-ray analysis, reported in 1977, used a computer system to extract optical and digital measurements from the scans to classify the severity of lung disease. The set-up consisted of a film transportation system, a digital image scanner and an RSI Fraunhofer diffraction pattern sampling unit. The system can be utilised in several disease areas such as lung disease, heart disease and cancer. For example, results have indicated accuracy rates for pneumoconiosis (an interstitial lung disease) comparable to visual readings of the films by expert radiologists.

The advantage of automated chest X-ray analysis is in the detection of abnormalities. This approach would lead to faster decision-making and fewer diagnostic errors. Automated chest X-ray analysis can also be used as a primary screening tool for cardiomegaly (enlarged heart), which is a marker for heart disease.
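One measurement that an automated system could report for cardiomegaly screening is the cardiothoracic ratio: the widest diameter of the heart divided by the widest internal diameter of the chest on a frontal chest X-ray, with a ratio above 0.5 conventionally treated as enlarged. The article does not name this measure, so the sketch below is an assumption about how such a screen might work, and the widths used are invented example values.

```python
def cardiothoracic_ratio(cardiac_width_mm, thoracic_width_mm):
    """Widest heart diameter divided by widest internal chest diameter."""
    if thoracic_width_mm <= 0:
        raise ValueError("thoracic width must be positive")
    return cardiac_width_mm / thoracic_width_mm


def flag_cardiomegaly(ratio, threshold=0.5):
    """Flag for review when the ratio exceeds the conventional 0.5 cut-off."""
    return ratio > threshold


# Invented example measurements, as an image-analysis step might supply them.
enlarged = cardiothoracic_ratio(cardiac_width_mm=160, thoracic_width_mm=300)
normal = cardiothoracic_ratio(cardiac_width_mm=130, thoracic_width_mm=300)

print(f"{enlarged:.2f} flagged: {flag_cardiomegaly(enlarged)}")
print(f"{normal:.2f} flagged: {flag_cardiomegaly(normal)}")
```

The difficult part, which this sketch leaves out, is locating the heart and chest borders in the image; that is the task a machine learning model would perform before a simple rule like this is applied.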

Atrial fibrillation

Atrial fibrillation is a heart condition that causes an irregular heart rate. It can be asymptomatic and is associated with stroke, heart failure and death. However, the existing screening methods for atrial fibrillation require prolonged monitoring. In these cases, machine learning can be used to identify patients with atrial enlargement by analysing chest X-rays. This approach would help to rule out other cardiac or pulmonary problems and provide suitable treatment plans for individual patients. AI could also automate other measurements such as carina angle measurements, pulmonary artery diameter and aortic valve analysis. The application of AI tools to imaging data could help in the evaluation of specific muscle structures, such as thickening of the left ventricle wall, and could extend to changes in blood flow through the heart and arteries.

Degenerative neurological diseases

Motor neurone disease (MND) is divided into four types, depending on the type and extent of motor neurone involvement and the location of symptoms within the body. These types are progressive muscular atrophy (PMA), amyotrophic lateral sclerosis (ALS), primary lateral sclerosis (PLS) and progressive bulbar palsy (PBP). There is no cure for MND, which makes it a devastating diagnosis for patients. Medical imaging studies are used in the diagnosis of ALS to check whether specific lesions are the underlying cause.

In some cases, there may be lesions that mimic the disease and give a false positive. The development of biomarkers aims to increase the accuracy of MND diagnoses. For example, blood oxygen level-dependent (BOLD) functional magnetic resonance imaging (fMRI) can detect areas of neuronal and synaptic activation when responding to experimental stimuli.

Currently, manual segmentation and quantitative susceptibility mapping (QSM) assessments are used to investigate motor cortex function. For example, the whole-brain landscape of iron-related abnormalities in ALS can be assessed using the in vivo MRI technique of QSM. Whole-brain MRI-QSM analysis is sensitive to tissue alterations in ALS that may be relevant to pathological changes.

The QSM process is time-consuming, and automating it with machine learning will contribute to the development of imaging biomarkers. More advanced algorithms will be able to evaluate images that indicate evidence of ALS or PLS. Computer-assisted therapeutic management is involved in selecting the correct medication and optimising dosages. This approach is also essential in deciding the course of therapy needed for the patient. The system aims to decrease the adverse effects of hypersensitivity reactions and to reduce costs. It can also change the method of data interpretation for patients with neurodegenerative disorders.

The integration of AI into the clinical setting is assisting healthcare workers with the optimisation of treatment plans for complex disorders. These technology platforms help to diagnose epilepsy, stroke, ALS, spinal cord injury (SCI), Alzheimer’s disease and Parkinson’s disease. For example, AI-based clinical and decision-making processes can monitor neurodegenerative functions on a continual basis, supporting an effective diagnosis. Dynamic knowledge repositories are linked to AI systems to train algorithms on the vast collection of electroencephalography and electromyography reports, especially to obtain real-time clinical data on patients’ specific problems.

The introduction of AI and machine learning into the clinical setting will change the interaction between the patient and healthcare professional. The development of non-invasive and low-cost support tools involving computer-aided diagnosis will support clinicians in the management of patients with Parkinson’s disease and Alzheimer’s disease.

The early stages of these degenerative neurological diseases produce exceptionally few observable changes in the patient and therefore do not provide enough information for early diagnosis. In these cases, predictive models based on fMRI and diffusion-weighted imaging can be used to detect tiny changes in disease patterns that the healthcare professional might miss.

Convolutional neural networks and diabetic retinopathy

Approximately 420 million people worldwide are diagnosed with diabetes mellitus and approximately 33% will be diagnosed with diabetic retinopathy (DR), a chronic eye disease that can progress to irreversible vision loss. The early detection of DR relies on skilled readers and is both labour and time-intensive, so machine medicine has been applied to its automated detection. In particular, convolutional neural networks (CNNs) have been used on colour fundus images for the recognition task of diabetic retinopathy staging. These network models achieved test metric performance comparable to baseline literature results, with validation sensitivity of 95%. Automated detection and screening offer a unique opportunity to prevent a significant proportion of vision loss in the population. Medical images are crammed with subtle features that can be crucial for diagnosis, whereas networks pre-trained on the ImageNet dataset have been optimised to recognise macroscopic features. Overall, the aim is to improve the recognition of mild disease forms and to transition to more challenging cases with the overall benefit of multi-grade disease detection.
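Sensitivity, the metric quoted above, is the proportion of people who truly have the disease that the test correctly flags; specificity is the matching proportion for people without the disease. The short sketch below shows how both are calculated from a screening run. The counts are invented for illustration and are chosen only so that the sensitivity comes out at 95%; they are not results from the study.

```python
def sensitivity(true_positives, false_negatives):
    """Share of patients with the disease that the screen correctly flags."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives, false_positives):
    """Share of patients without the disease that the screen correctly clears."""
    return true_negatives / (true_negatives + false_positives)


# Invented counts for a screening run of 300 fundus images.
tp, fn = 95, 5    # 100 images with retinopathy: 95 detected, 5 missed
tn, fp = 180, 20  # 200 images without: 180 cleared, 20 false alarms

print(f"sensitivity: {sensitivity(tp, fn):.0%}")
print(f"specificity: {specificity(tn, fp):.0%}")
```

For a screening tool, high sensitivity matters most because a missed case can progress to vision loss, while lower specificity mainly costs extra referrals.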

Skin cancers

Skin cancer is the most prevalent form of cancer and is classified into the following groups: basal and squamous cell cancers; melanoma; Merkel cell cancer; lymphoma of the skin; and Kaposi sarcoma. It is essential to identify which form of skin cancer the patient has so that they receive the right treatment plan. In the US, more than 9,500 people are diagnosed with skin cancer every day, and at least two people die of skin cancer every hour.

The most common forms of skin cancer are diagnosed visually. This process consists of an initial clinical screening, followed by dermoscopic analysis and a biopsy for histopathological examination.

AI systems could play a role in the diagnosis of the type of skin cancer through the automated classification of skin lesions. The deep convolutional neural network (CNN) approach may be able to distinguish between the different groups of skin cancers. The CNN was trained on a dataset of 129,450 clinical images covering 2,032 different diseases. The system was validated against the expertise of 21 dermatologists on two tasks: distinguishing keratinocyte carcinomas, the most common skin cancers, from benign seborrheic keratoses; and distinguishing malignant melanomas, the deadliest skin cancer, from benign nevi. The CNN results were just as good as the expert opinion, confirming that AI was able to classify the type of skin cancer with the same ability as a qualified dermatologist. AI systems are starting to be incorporated into mobile devices, and these deep neural networks will be able to reach patients who cannot see a dermatologist face-to-face.
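The final step of such a classifier, turning the network’s raw output scores into a probability for each lesion type, is usually done with the softmax function. The sketch below illustrates that step only; the class labels are taken from the article, but the scores are invented and the study’s own output layer is not described here.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["benign nevus", "malignant melanoma", "seborrheic keratosis"]
raw_scores = [1.0, 2.5, 0.3]  # invented network outputs for one image

probabilities = softmax(raw_scores)
prediction = labels[probabilities.index(max(probabilities))]

for label, p in zip(labels, probabilities):
    print(f"{label}: {p:.2f}")
print(f"predicted class: {prediction}")
```

Reporting the full set of probabilities, rather than only the top class, lets a clinician see how confident the system is and when a second opinion is warranted.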

CheXpert pneumonia

Researchers at Stanford University have developed an AI system called CheXpert that can detect pneumonia in chest X-rays within 10 seconds, compared with a radiologist, who could take 20 minutes to make the same diagnosis. A rapid diagnosis means that the patient can undergo treatment for pneumonia without delay. CheXpert is a fully integrated automation system that can interpret chest X-rays based on AI.

In the clinical setting, CheXpert was able to analyse chest X-rays accurately and give a rapid diagnosis of pneumonia, which was confirmed by the healthcare professional. To train the AI component of CheXpert, the team provided the system with 224,316 chest radiograph files from 65,240 patients. The model was further refined using 6,973 more images from several hospitals.

Multiple sclerosis

Multiple sclerosis (MS) is the most common form of inflammatory demyelinating disease of the central nervous system and affects about 2.5 million people worldwide. In 2020, Public Health England released new MS prevalence data based on GP records from 2018. These data indicated that the number of people with MS in the UK was 131,720 adults and 250 children.

Researchers at UCL and King’s College London have applied MRI to assist in the diagnosis of MS. This dynamic imaging modality is capable of detecting multiple lesions, and new lesions on follow-up scans. AI systems based on MRI can therefore be used to help in the diagnosis of relapsing-remitting MS, and this was demonstrated by analysing the brain’s response to natalizumab (brand name Tysabri) treatment. The AI system extracted information from the patient’s MRI scan and compared it against a dataset of MRI brain scans, with the aim of seeing what changes were taking place in the white and grey matter of the brain. This approach demonstrated higher sensitivity than conventional analysis of brain scans in the diagnosis of MS.

Conclusion

Artificial Intelligence (AI) is becoming established throughout medical imaging, especially in the diagnostic areas of mammography, CT and digital chest X-rays. Since the discovery of X-rays by Wilhelm Conrad Röntgen 125 years ago, this high-energy electromagnetic radiation has changed the way we look at the human body. In the UK there is currently a shortage of radiologists. In 2018, hospitals reported 379 vacant consultant radiologist posts across the UK, with 61% being unoccupied for at least a year.

Radiologists need to be aware of the basic principles of machine learning and deep learning systems, especially datasets. Radiologists do not have to be computer scientists, but they do need to know how to communicate with data scientists and understand the output from these AI systems. In the future, some radiologists may be replaced by robot radiologists; that does not mean that the radiologist will become obsolete, only that fewer may be required.

AI will enhance the future role of the radiologist due to the ability of algorithms to replicate the skills of radiologists. On a note of caution, radiologists who embrace AI may eventually replace the ‘traditional’ radiologist. The essence of AI algorithms is to spot detail on medical images and to devise new ways of interpreting these images which the radiologist does not yet fully understand. AI systems could also be used to examine medical records and determine whether a patient really requires a scan. This matters because some medical imaging modalities are overused; for example, at least 80 million CT scans are performed every year in the US.

The radiologist has to accept that AI systems are now entering the clinical setting and have the ability to reduce the time patients wait to receive treatment. AI will also reduce the workload of the radiologist and might eventually lead to a reduction in the number of human radiologists. This is not the first such attempt: in the 1990s, computer-assisted diagnosis (CAD) was used to read radiological scans to detect breast cancer in mammograms, but at the time CAD technology in hospitals resulted in many errors due to the difficulties in using the system. The medical AI revolution has arrived, and several surveys amongst radiologists have found that 84% of radiology clinics in the US have accepted the use of robot radiologists in the clinical setting.

Disclaimer

The information presented in this article is for general educational and informational purposes only. It reflects the research and views available at the time of writing and should not be construed as professional medical advice, diagnosis, or treatment. While the article discusses the applications of Artificial Intelligence (AI) and machine learning in medical imaging and diagnostics, it does not substitute for consultation with qualified healthcare professionals.

Open Medscience makes no representations or warranties regarding the accuracy, reliability, or completeness of the content provided. Readers should be aware that the development and use of AI in medicine is a rapidly evolving field, and some details may become outdated over time.

The technologies, systems, and clinical examples mentioned are referenced for illustrative purposes and do not imply endorsement or certification by Open Medscience. Implementation of such systems should only be undertaken by appropriately trained professionals and in accordance with local regulations and standards of care.

Any references to products, organisations, or services do not constitute or imply an endorsement or recommendation. Open Medscience shall not be held liable for any loss, injury, or damage resulting from reliance on the content of this article.

Always seek the advice of your physician or other qualified health provider with any questions you may have regarding a medical condition.
